Introduction

We tend to think of autonomous vehicles solely as machines. The progression of technology has made it possible to have cars that drive themselves in a more or less sophisticated manner. As is the case with many increasingly complex technologies, the general public has a somewhat narrow and skewed understanding of the mechanisms and workings of such vehicles. Cars and humans share a common ground, though: traffic. Within cities, on streets, in parking lots, etc., humans and autonomously driving vehicles need to interact in some way. This means that not only is the car required to recognize and evade a human being, but the human also needs to be made aware of a car that is driving on its own. The former is usually achieved through software, whereas the latter is not always made clear.

Self-driving cars situate themselves in their environment using cameras and various sensors. In an anthropomorphized way they need to be aware of their surroundings. When they encounter us humans, we necessarily form data-points within the software’s reasoning. In this essay I will examine the role of the pedestrian within the context of autonomous vehicles. I will give a theoretical background on the technology used and then focus on various forms of testing situations, including those that happen involuntarily and publicly. The guiding question is: What constitutes the interaction between autonomous vehicles and pedestrians?

* * *

Main

Theoretical Foundation

To establish a foundation for further analysis, we need to survey a car’s sensorium. From the most basic instruments, like the fuel gauge, to advanced LiDAR technology, a car’s sensors allow for a more convenient driving experience. Cars could, practically speaking, work solely with an engine and an empty dashboard. Anything else is added either for safety reasons or for convenience. The majority of a vehicle’s sensorium allows a car to see its surroundings. We will therefore frame the following analysis in the light of a ‘car as a seeing machine’[1].

The Sensorium of Modern Cars

The evolution of modern cars coincides with the development of modern computers. Vehicles manufactured in the 21st century rely on software to a much larger extent than their counterparts from, e.g., the 1980s. Some sets of sensors have remained relatively unchanged since the beginning, while others have seen considerable changes over time.

The sensorium of regular cars almost always features the classic vehicle dashboard with speedometer, fuel gauge, oil temperature and sometimes an r.p.m. counter. They are usually taken for granted, but since they were initially designed for a specific reason, we can be sure that they offer a pragmatic and simple way of assessing the vehicle’s basic values.[2]

Since there are near infinitely many ways to measure anything in the real world, the number of sensors in cars has steadily increased. From tire pressure to rain sensors, automatic headlights, park-distance-control, etc., a multitude of factors can now be taken into consideration when operating a vehicle. The classic dashboard instruments require active observation by the user, whereas these newer sensors only alert the driver when the measurements cross a certain threshold (for example, the distance to an obstacle while parking).
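The difference between an instrument that must be read and a sensor that reports on its own can be illustrated with a minimal sketch. The two threshold values and the function name `parking_alert` are assumptions made for illustration; they are not taken from any real park-distance-control system.

```python
from typing import Optional

# Hypothetical thresholds in metres; real systems use manufacturer-specific values.
WARNING_DISTANCE_M = 1.0
CRITICAL_DISTANCE_M = 0.3


def parking_alert(distance_m: float) -> Optional[str]:
    """Return an alert only when the measured distance crosses a threshold."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= CRITICAL_DISTANCE_M:
        return "continuous tone"
    if distance_m <= WARNING_DISTANCE_M:
        return "intermittent beep"
    return None  # nothing to report, so the driver is not disturbed


# Three example readings: far away, close, very close.
for reading in (2.5, 0.8, 0.2):
    print(reading, parking_alert(reading))
```

As long as the obstacle is far away the function returns nothing at all, which mirrors the shift from observation by the driver to notification by the car.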

Agency

With the increasing capabilities of software, algorithms and quasi-autonomous systems in cars, a fundamental question changed: What about the agency of the car? In the standard scenario of a human driver involved in regular and basic traffic situations we could always be assured that the human is in control. After all, he/she is the one behind the steering wheel and the one making decisions. But after the introduction of software into the machinery, the landscape changed slightly.

Automatic systems like Emergency Braking, Lane-Assistant and Blind-Spot-Detection all feature the ability to override human commands to a certain extent. Those overrides aim to protect the passengers against potentially harmful situations and tend to react faster than a human.[3]

The case shifts completely when we talk about autonomous cars. It is no longer an asset that the car features automatic systems – it is the requirement for basic functionality. To give a car self-driving capability means to equip it with the necessary bits of software to make autonomous decisions. The agency shifts from the driver to the operating system. Of course, the driver should be alert at all times and intervene if necessary, meaning the software must allow manual overrides by the driver.

Technologies

All the comforts of modern-day driving are made possible by several technologies, some of them originally developed by the US military.[4] We can classify the different types of sensors as active and passive. Passive sensors register what reaches them from within their range of perception (e.g. a camera), whereas an active sensor emits a signal of its own and measures its reflection to gather information about the immediate surroundings (e.g. Radar).[5]

RADAR

Radar is short for radio detection and ranging and utilizes radio waves to detect objects. Obstacles reflect the invisible waves, and the returning signal allows for an analysis of the environment. Radar is low resolution and was initially developed for aerospace and nautical applications, since planes and ships manoeuvre more slowly and more predictably than cars. Radar for vehicles comes in two optimized forms: short-range for objects within 30 meters and long-range for objects up to 250 meters away.[6]

Ultrasound

Ultrasound operates on the same principle as Radar, just with different waves. Sound waves beyond the range of human hearing are sent out and their delayed reflection is measured. The operation is similar to sonar, or to the echolocation used, for example, by bats or dolphins.
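The shared principle of Radar and ultrasound, measuring how long a reflection takes to return, can be written down in a few lines. The following is a minimal sketch, assuming ideal conditions (a single clean echo, radio waves at the vacuum speed of light, sound in air at about 20 °C); the function name `echo_distance` is chosen here for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves (Radar), vacuum value
SPEED_OF_SOUND_M_S = 343.0          # ultrasound in air at roughly 20 degrees Celsius


def echo_distance(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to the reflecting object.

    The wave travels to the object and back, so the one-way
    distance is half of speed multiplied by round-trip time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return wave_speed_m_s * round_trip_s / 2.0


# A radar echo after one microsecond and an ultrasound echo after ten milliseconds.
radar_range_m = echo_distance(1e-6, SPEED_OF_LIGHT_M_S)
ultrasound_range_m = echo_distance(0.01, SPEED_OF_SOUND_M_S)
print(f"radar: {radar_range_m:.1f} m, ultrasound: {ultrasound_range_m:.3f} m")
```

The very different wave speeds explain the division of labour: an echo from a few metres away is easy to time with sound, whereas radio waves cover hundreds of metres within microseconds.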

Infrared

Infrared sensors use light waves outside the range of human perception to assess environments with low natural light. Active IR sensors send out electromagnetic waves and detect their reflection, whereas passive IR sensors pick up the infrared light that is radiated from objects, usually in the form of heat.

LiDAR

The similarity of the acronym to Radar hints at the technology of LiDAR (light detection and ranging). Instead of radio waves, LiDAR uses (near) visible light and infrared. LiDAR is among the most advanced technologies, since the emission of electromagnetic waves relies on laser technology. It was first developed in the early 1970s by the US Military and NASA to map the surface of the moon.[7] In contrast to the other forms of sensors, LiDAR allows for a 3D rendering of the objects detected, a much more advanced approach to environment awareness. The effective range is up to 250 meters with modern sensors.[8]
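How a range measurement turns into a 3D rendering can be sketched as follows. Each laser return consists of a distance and the two angles at which the beam was sent out; converting these to Cartesian coordinates yields one point of a point cloud. The axis convention (x forward, y left, z up) and the function name `lidar_point` are assumptions made for this illustration, not a description of any particular sensor.

```python
import math
from typing import Tuple


def lidar_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one laser return (range plus two beam angles) into x, y, z."""
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)  # projection onto the ground plane
    x = horizontal * math.cos(azimuth)          # forward
    y = horizontal * math.sin(azimuth)          # to the left
    z = range_m * math.sin(elevation)           # upwards
    return (x, y, z)


# A tiny "scan": three returns at different angles form three points in space.
scan = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (10.0, 0.0, 30.0)]
point_cloud = [lidar_point(r, az, el) for r, az, el in scan]
for point in point_cloud:
    print(tuple(round(coordinate, 2) for coordinate in point))
```

Repeating this conversion for a very large number of beams is what produces the three-dimensional picture that distinguishes LiDAR from the other sensors described above.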

The Quest for Autonomy in Cars

The idea that cars could potentially drive on their own is almost as old as the underlying technology itself. This is no wonder, since the modern car was initially a replacement for a horse-drawn carriage. Given their cognitive power and proper training, horses could, in theory, pull carriages to a destination by themselves.[9] The complexity of the world is of course too great to make this in any way feasible, but the idea remained. Since we have already substituted horses with cars, we might want to retain the ability to navigate the world autonomously.

One notable example is an experiment that was run in 1987 at the Universität der Bundeswehr in Munich. Professor Ernst Dieter Dickmanns[10] and his team equipped a Mercedes Düdo with a computer and a camera, and had it drive the distance from Mannheim to Pforzheim autonomously.[11] The camera was able to rotate about two axes (left/right, up/down) and the signal was processed on an onboard computer. It is notable that the team relied on software that reacted to the environment and dismissed the idea of accurate map data. Since this was before the public availability of GPS and eleven years before even the founding of Google,[12] the options were limited.

The test course was part of the newly built highway A5, before it was opened to the public. This limited the influence of other participants in traffic, but it also implicitly showcased the limitations of the system. A test in the wild (meaning on a public Autobahn) was never conducted, and after the experiment the computerized car was transferred to the Deutsches Museum in Munich, where it is still on display.[13]

Navigation in the real World

Navigation in the real world is sometimes challenging even for human drivers. Equipping a car with fully autonomous driving capabilities remains the holy grail of the automotive industry.[14] To orient itself within the real world, a car can utilize several tools. We will largely exclude software in this analysis and assume that the underlying programming of a sensorium and the machine-to-machine communication just works.

There are two separate camps when it comes to the sensorium used in autonomous driving. Tesla focuses on a vision-only approach that uses camera images as its main form of input,[15] whereas almost everybody else relies on LiDAR sensors combined with short-range Radar.

As for training the software that enables autonomous driving, we see a similar divide as in generative AI: symbolism and connectionism. In short, symbolism focuses on reasoning by logic and first principles, whereas connectionism favours an approach via machine learning and artificial neural networks.[16]

There is the notion that enough input (training) data will eventually and inevitably produce a general intelligence – tailored to operating vehicles. Aside from the sensorium (e.g. Camera vs. LiDAR) there is not yet consensus on the best approach to training and testing the software.

The media scholar’s take on this is a nod to “The map is not the territory”, a dictum by Alfred Korzybski.[17] Even though the idea is rooted in General Semantics, we can find an analogy here. Any attempt to find suitable software to map the real world remains just that: a map. Therefore, we teach cars to navigate a virtual map and, as a consequence, a map of the real world.[18]

Levels of Autonomy (SAE)

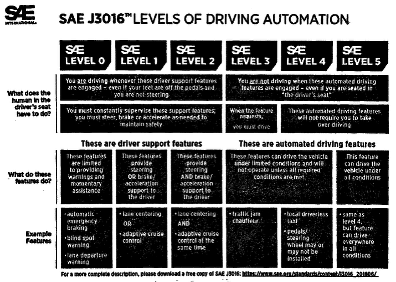

To understand the relationship between autonomous vehicles and pedestrians, we will examine different forms of autonomy. The overview follows the classification proposed by the Society of Automotive Engineers (SAE) in 2021.[19] It features six different levels (Level 0-5) that can be clustered into two groups. Levels 0 to 2 are systems designed to support the driver, whereas Levels 3 to 5 are features of automated driving, including full autonomy.

Level 0

Basically, any car on the roads today is a Level 0 (autonomous) car. Basic features include mostly convenience applications such as Park-Distance-Control, Blind-Spot-Detection or Lane-Departure-Warning. These features are easily compatible with human capabilities and alertness. Simply put: It is possible to park without Park-Distance-Control, it just makes it more convenient.[20]

Level 1

Most new cars that are produced in or shipped to the EU are Level 1 (autonomous) cars. Features on these kinds of cars include, for example, Lane-Centering or Adaptive-Cruise-Control. They also include safety features like emergency braking/acceleration. Despite all this convenience, it is legally required to keep the hands on the steering wheel, so this level has been dubbed hands-on.[21]

Level 2

A Level 2 (autonomous) car allows for multiple features to be engaged at the same time. Most of this is done via a considerable amount of software. For example, a lane-centering assistant can work together with adaptive-cruise-control and emergency braking, essentially leaving the driver in an observing position rather than a driving one. The functionality is designed to give the driver a momentary hands-off mode, without losing control over the vehicle.[22]

Level 3

More advanced systems are built into Level 3 (autonomous) cars, which can operate quasi-autonomously in narrowly defined situations. Those include traffic-jam assistants (called a chauffeur) or assisted lane changes. The driver remains in control, but the features are designed so that the driver does not have to watch the road at all times. Therefore, this level is dubbed eyes-off.[23]

Level 4

All levels described above show a clear hierarchy of driver over car (software). Level 4 quasi-autonomous cars are designed to take over all functions commonly assigned to the driver (braking, acceleration, turn signals). These vehicles are capable of working on their own but require a human as back-up. The driver can be inattentive at times and the level is called minds-off. It poses a problem, though. If the situation requires human intervention, then the driver must focus on the situation in an exceptionally short period of time and handle what the machine cannot. Calling it minds-off is therefore somewhat misleading and merely points to the capabilities of the car.

Level 5

At the final level the brackets around “autonomous” can be dropped. The human in the driver seat is reduced to a passenger and a steering wheel is optional.[24] All driving functionality is handled by software. These vehicles can drive on their own and handle traffic situations under all conditions. They would typically be equipped with a (tailored) artificial general intelligence[25] that handles the different scenarios.
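The six levels described above can be condensed into a small data structure. The sketch below only encodes what this section states: the split between driver support (Levels 0 to 2) and automated driving (Levels 3 to 5), and the nicknames mentioned for Levels 1 to 4. The identifiers `SaeLevel`, `NICKNAMES`, `is_automated_driving` and `nickname` are chosen for illustration and are not part of the SAE standard.

```python
from enum import IntEnum
from typing import Optional


class SaeLevel(IntEnum):
    L0 = 0
    L1 = 1
    L2 = 2
    L3 = 3
    L4 = 4
    L5 = 5


# Nicknames as used in the text; Levels 0 and 5 are given none there.
NICKNAMES = {
    SaeLevel.L1: "hands-on",
    SaeLevel.L2: "hands-off",
    SaeLevel.L3: "eyes-off",
    SaeLevel.L4: "minds-off",
}


def is_automated_driving(level: SaeLevel) -> bool:
    """Levels 3 to 5 count as automated driving, Levels 0 to 2 as driver support."""
    return level >= SaeLevel.L3


def nickname(level: SaeLevel) -> Optional[str]:
    return NICKNAMES.get(level)


for level in SaeLevel:
    group = "automated driving" if is_automated_driving(level) else "driver support"
    print(f"Level {level.value}: {group}, nickname: {nickname(level)}")
```

Seen this way, the decisive boundary for the pedestrian lies between Level 2 and Level 3, because from there on the software rather than the human is the primary observer of the road.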

Testing Situations

With the rise of advanced driving assistance systems (like automatic braking, collision avoidance, etc.) and an increased number of autonomous vehicles, the line between where a testing situation ends and where the real world begins has become blurry. Most of the training data that, for example, Tesla utilized to develop Full-Self-Driving (FSD) allegedly came from Tesla customers and their cars’ built-in dash-cams.[26] Likewise, the ideal testing ground is apparently not an artificial training course, but the real world as such.

Naturally, this results in a number of curiosities and sometimes dangerous situations. An example given by Philippe Sormani illustrates this: a Smart Shuttle tested at a trade fair tended to stop at a particular spot on the premises, waiting for a few seconds before moving on. It was unclear why the vehicle behaved like that, but the fact that it always resumed its course was enough not to inquire any further.[27] The operator (driver) remarked: “here [it] does each time the same [thing] to us.”[28]

What Constitutes a (Testing-)Situation?

An autonomous vehicle operates in the real world in relation to other actors and its environment. The concept of environment frames the constitution of a situation. We can define a (testing) situation as the cross-influence of different actors within an environment. The public space is such an environment, populated with machines, humans and animals as actors and various (technological) artefacts in each interchangeable context. Environments differ from constructed (testing) situations in their fluctuating characteristics. As French scholar Louis Quéré put it:

[A]n environment in itself has neither axes nor directions since we are the ones who set them in different ways; these settings give rise to an ‹environing experienced world›. […] It is the orientation of experience that gets one from the environment to the situation, because situations come under the register of the organization of experience, which is not the case of environments. Someone who is disoriented is still in an environment.[29]

A notable example of how a testing situation is constructed happened in Coventry, UK, in November 2017. Even though the testing situation was publicly announced, the test itself appeared rather artificial. Traffic cones, designated routes and lanes, and the presence of police conveyed an image of constructedness.[30] The public announcement read:

The UK’s largest trial to date of connected and autonomous vehicles technology on public roads explor[es] the benefits of having cars that can ‹talk› to each other and their surroundings – with connected traffic lights, emergency vehicle warnings and emergency braking alerts. The vehicles rely on sensors to detect traffic, pedestrians and signals but have a human on board to react to emergencies. The trials are testing a number of features and most importantly seeking to investigate how self-driving vehicles interact with other road users.[31]

Sormani remarked on the rather primitive capability of such a test. The AI system used was unable to coordinate in situ with other traffic participants, he noted. The impression was that of an artificial and constructed situation that showed more of an adjustment of reality to an AI system than the tailoring of an AI towards reality.[32]

He concluded that the introduction of artificial intelligence into society requires the modification of the societal environment to the extent that the differentiation between artefact and environment (after L. Quéré) becomes obsolete.[33] Sormani argues that the modification of environments (streets and urban areas) bears the danger of being tailored either towards machines or towards humans. Favouring one over the other might result in disorientation (machine) or confusion (human). We cannot simply assume that a world that favours machines will be easy for people to navigate.[34]

Social Deficit

The behaviour of current AI systems is characterized by a vast amount of training data and comparatively little social intelligence. This is the verdict of N. Marres and P. Sormani, after examining the behaviour of autonomous vehicles in cities. Actors in regular traffic are not only part of a large data set, nor are they particularities of the environment – they form part of a societal public sphere.

An AI model that allows for the navigation of a vehicle in an urban area should therefore not only recognize signs, traffic lights and road markings, but also account for pedestrians as actual people. This still appears to be a human characteristic, as various in-the-wild tests have shown.[35] Marres and Sormani argue that AI-driven vehicles still operate by statistical rules. Everything is reduced to data. They argue:

“[T]hey still do follow rules, rules of the road, but probably more fundamentally statistical properties, based on experience. And the way we learn these rules is through … as children or as young adults … is observing the patterns of how vehicles move. And what we are implicitly learning is physics.”[36]

Even though we follow social rules in human-to-human interaction, those rules are difficult to put into software. Human interaction is also improvised, highly situation-dependent and unpredictable. This accounts not only for quirky situations such as elderly people crossing a road and turning around in the middle. It reflects the difference in nature between machines and humans.[37]

Context Dependence

One marker of a successfully operating AI is the ability to adapt to different situations in various contexts. This flexibility can be one indicator of intelligent behaviour. If a system lacks this ability and reacts as if overwhelmed (anthropomorphising on purpose), the test is assessed as failed. AI systems in cars need to be able to interact with societal artefacts, in the socio-ecological sense. Their ability to assess the artefacts as either living beings, objects of interaction or evasion, or parts of the environment will determine the successful implementation of an AI system within a vehicle. Any failure in one of those three categories may result in catastrophic events and endangers pedestrian safety.[38]

It is obvious that current AI models are still far from perfect, which makes testing inevitable. Testing in the real world bears an opportunity to assess the interplay of artefact (in the Quéré-ian sense), environment (in the earlier defined way) and context (in the above-described sense). Two approaches to optimization arise. In the first, the environment is altered so that the AI works more seamlessly, which may result in human confusion. In the second, the AI is trained on more and more training data so that intelligent behaviour emerges from statistical data and the possibility of the software crashing is minimized.

Both approaches showcase implicitly that objects (artefacts) in the real world are usually subject to interpretation. Pedestrians and other actors in traffic are classified data-points for software, yet a human observer immediately identifies them within a cultural context. As Sormani put it, the socio-cultural artefacts in traffic are always “embodied [and], entangled, never pure, never alone”.[39]

He adds that any given experiment is at first subject to testing isolated parameters and therefore a constructed testing-environment is necessary. That such testing scenarios temporarily alter and disturb normal traffic does not pose a problem, in his eyes. Even a disturbed social situation is a social situation after all. On a meta-level this showcases the handling of not only autonomous vehicles, but of environment modifications by the participating public and district authorities.[40]

When asked the question “Do we have a situation?”, Sormani answers:

No, insofar as we have a situation deficit in the way that AI is conceptualized, implemented and debated – contrary to the belief that machine learning systems are able to learn in a context-dependent way.

Yes, insofar as the introduction of AI into society evokes critical situations, public and political, that are under-represented in public discourse.[41]

Situations of cross-influencing actors?

Participants in traffic are usually entities with a direction, aim and speed (we include stationary objects as having a speed of zero). The interaction of such participants creates complications reminiscent of the three-body problem in astronomy.[42] This means that cross-influence is taking place at all times. We can regard this unique mixture of participants in each situation as a sociotechnical assemblage of artefacts in an environment.
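The description of participants as entities with a direction and a speed can be made tangible with a deliberately simplified sketch. It assumes that every participant keeps a constant velocity, which is exactly what real pedestrians do not do, and computes for each pair when and how closely they would pass each other. The class `Participant`, the function `closest_approach` and all coordinates are hypothetical.

```python
import math
from dataclasses import dataclass
from itertools import combinations
from typing import Tuple


@dataclass(frozen=True)
class Participant:
    name: str
    x: float   # position in metres
    y: float
    vx: float  # velocity in metres per second
    vy: float  # stationary objects simply have vx = vy = 0


def closest_approach(a: Participant, b: Participant) -> Tuple[float, float]:
    """Return (time in s, distance in m) of closest approach for constant velocities."""
    dx, dy = b.x - a.x, b.y - a.y
    dvx, dvy = b.vx - a.vx, b.vy - a.vy
    relative_speed_sq = dvx * dvx + dvy * dvy
    if relative_speed_sq == 0:
        t = 0.0  # no relative motion: the distance never changes
    else:
        # Minimise the squared distance over t, but never look into the past.
        t = max(0.0, -(dx * dvx + dy * dvy) / relative_speed_sq)
    return t, math.hypot(dx + dvx * t, dy + dvy * t)


scene = [
    Participant("car", 0.0, 0.0, 10.0, 0.0),
    Participant("pedestrian", 50.0, -5.0, 0.0, 1.0),
    Participant("parked car", 20.0, 3.0, 0.0, 0.0),
]

# Every pair influences each other: n participants give n * (n - 1) / 2 pairs.
for a, b in combinations(scene, 2):
    t, d = closest_approach(a, b)
    print(f"{a.name} / {b.name}: closest after {t:.1f} s at {d:.1f} m")
```

With three participants there are already three pairs to watch, and the number grows quadratically with every additional actor. As soon as one participant changes course in reaction to another, all of these predictions have to be recomputed, which is the cross-influence described above.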

Obviously, the testing of autonomous systems is essential and necessary, as is the case with any other technological system. The complexity of autonomous vehicles in particular calls for a wide variety of tests and for trying out different testing scenarios. It is sometimes useful to single out one actor (participant) of a situation to assess certain information. This can be done from the point of view of a vehicle, a pedestrian or something else entirely (e.g. a speed camera).

When tests in the wild are conducted, the behaviour of pedestrians is less predictable, especially in crowded urban areas. Not all autonomous cars indicate when they are driving autonomously, which has an influence on pedestrians’ behaviour. Just as people behave differently in the presence of cameras, the movements, care and awareness of people change in the proximity of autonomous cars. This is an example of rather strong cross-influence. If people move so that an autonomous car can drive more easily, the car will “learn” that most pedestrians move in a particularly careful way, skewing the statistical analysis of pedestrians’ safety.[43]

If there is no indication of autonomy, then we get a much more realistic testing situation, since the cross-influence is reduced. Tests in the wild should therefore be carefully constructed as blind studies, or even as double-blind studies. For realistic data, pedestrians should not be aware of a car’s autonomy, nor should an autonomous car be ‘aware’ of other cars driving autonomously.[44]
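The skewing effect of visible autonomy on the collected statistics can be illustrated with simple arithmetic. The sketch below assumes, purely for illustration, that pedestrians who know a car is autonomous behave cautiously more often than those who do not; all rates are invented numbers, and `observed_cautious_rate` is a hypothetical helper.

```python
def observed_cautious_rate(share_aware: float, rate_aware: float, rate_unaware: float) -> float:
    """Cautious-behaviour rate a car records when only some pedestrians know it is autonomous.

    The result is a weighted mix of the two groups' behaviour.
    """
    for value in (share_aware, rate_aware, rate_unaware):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all arguments must lie between 0 and 1")
    return share_aware * rate_aware + (1.0 - share_aware) * rate_unaware


# Hypothetical rates: aware pedestrians act cautiously 90 % of the time, unaware ones 60 %.
blind_test = observed_cautious_rate(0.0, 0.9, 0.6)   # nobody knows the car is autonomous
marked_test = observed_cautious_rate(0.5, 0.9, 0.6)  # half of the pedestrians know

print(f"blind test:  {blind_test:.2f}")
print(f"marked test: {marked_test:.2f}")
print(f"overestimate of caution: {marked_test - blind_test:.2f}")
```

Under these assumed numbers, a car that is recognizably autonomous would record noticeably more cautious pedestrians than actually exist in ordinary traffic. This is the statistical argument for constructing tests in the wild as blind studies.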

Statistical data plays a huge role in the process of machine learning when it comes to autonomous cars. Navigation along a specific, pre-determined route seems easy compared to the unpredictable randomness of crowded urban areas. Obtaining clean statistical data is therefore essential to achieving reliable autonomy in software.

Pedestrian Safety and Interaction with Vehicles

Pedestrians belong to a category of special obstacles within the reasoning of autonomous cars. They are less predictable, comparatively small, very vulnerable and highly valuable. Engineering for pedestrian safety is one of the most complicated aspects of autonomous driving. This includes collision avoidance, ethical dilemmas (e.g. the Trolley Problem[45]), passenger safety and navigation in tight spaces. A large share of the resources in the testing of modern cars is dedicated to human safety. Passengers and drivers are protected by automatic braking systems and airbags. Design specifics like the steepness of edges and corners[46] ensure that pedestrians are comparatively less vulnerable. Asked about those kinds of situations, an engineer replied:

We believe quite strongly that the complexity in driving on the roads, is not in observing where the road ends and the pedestrian crossing starts and where the traffic lights are, these static tasks of identification have been solved for a long, long time actually. The real challenge is modelling the behaviour of other so called agents, because they’re not necessarily totally rational or perfect or identical.[47]

As we have established earlier, a successful implementation of an AI works in all situations and circumstances. It should ensure pedestrian safety when all else fails. Not only does that call for software that is able to make very quick decisions based on predetermined values (ethical dilemmas), but it also calls for an intelligence that would act reasonably in situations where predetermination fails. In this line of argumentation, pedestrian safety is ensured when autonomous cars operate with a vehicle-tailored general intelligence.[48]

Societal and Ethical Dimensions

Black Swan Events

Many interactions between autonomous vehicles and pedestrians are thought of as representatives of classes of scenarios. One example might be a pedestrian trying to cross a street between parked cars. Another might feature an elderly person on a crosswalk, changing her mind mid-way and turning around. But the real world is complicated, and no amount of planning can predict the randomness of everything. What if the car encounters an elderly person on one side of a one-way street jumping at the car, a child at the rear screaming, and it suddenly begins to hail?

Those kinds of cases are called black-swan events, and the name suggests two things: they are (relatively or especially) rare, and, however unlikely, they are possible. The name goes back to the (false) belief, widespread since antiquity, that only white swans exist.[49] One black specimen was then enough to disprove this theory, hence the name. It is an almost mathematical approach to the theory and practice of autonomous driving. We can try to foresee all possible scenarios, but there will most likely be a black swan.

Public Perception and Trust in Autonomy

How can we trust in autonomous vehicles’ safety features? And, in turn, why do we trust human drivers in the first place? Adam Millard-Ball asks (and answers) these questions in his paper on Pedestrians, Autonomous Vehicles, and Cities.[50] After all, the factors that come into play when a situation becomes dangerous (for the car or the pedestrian) are far too many to be considered all at once.

Millard-Ball compares the relationship of pedestrians and cars to a high-stakes game of Chicken. The original idea is to do something daring and potentially fatal and see which player quits first. The game is played, for example, by children on bikes racing towards each other; likewise, by adults with real cars. The winner is the one who swerves last. The real stake in the game: at one point the question shifts from ‘win or lose?’ to ‘lose or risk fatal injuries/death?’. Of course, losing is preferable to fatality.[51]

The same goes for the situations where pedestrians and cars meet: crosswalks, intersections, suburban neighbourhoods, etc. When there are no designated ways for pedestrians to cross a road, the pedestrian must either assume a basic level of alertness on the part of the driver to stop in time, or simply let the car pass. Even if there are designated spots for crossing a road, the pedestrian’s way to the other side is a leap of faith. A driver could run a red light, might be tired, intoxicated or distracted, or might simply not react fast enough. Each of these factors might contribute to a fatal outcome. It becomes the inverted-Chicken-Game, where one party expects the other to stop first.[52]

The above-described situation is a pedestrian crossing a road, when it is expected (e.g. a crosswalk or traffic light). Yet there are other situations where the crossing is starkly unexpected, a highway (Autobahn) for example. Spaces where pedestrians do not belong, for obvious reasons, are tricky. Not only is the normal driving speed much higher, but a human driver might not expect a pedestrian. It remains the inverted-Chicken-Game, but this time the driver might trust/expect that the pedestrians stop.

The focus shifts when autonomous vehicles enter the scenario. A machine driver (software) is not prone to being distracted, tired or intoxicated. Nor does it behave differently in, for example, different emotional states. At least in principle this holds true – several black-swan cases and weird behaviours excluded for the sake of the argument.

Given that a pedestrian knows that an approaching vehicle is operating autonomously, the person could, in principle, just step out on the street and expect the car to stop. Software-wise, the car is unable to run down the pedestrian, nor is it required to swerve into traffic and potentially cause an accident. This requires that the speed of the car and its distance to the pedestrian allow for a safe full stop. Even with advanced technology there are laws of inertia at play.[53]
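The laws of inertia mentioned above can be put into numbers with the standard kinematic relation for a stopping distance, d = v·t + v²/(2a): the distance covered during the reaction time plus the braking distance. The following is a minimal sketch; the default reaction time of 0.5 seconds and the deceleration of 7 m/s² (roughly a dry road) are assumptions for illustration, not values from any specific vehicle.

```python
def stopping_distance_m(speed_kmh: float,
                        reaction_time_s: float = 0.5,
                        deceleration_m_s2: float = 7.0) -> float:
    """Reaction distance plus braking distance for a given speed."""
    if speed_kmh < 0 or reaction_time_s < 0 or deceleration_m_s2 <= 0:
        raise ValueError("speed and reaction time must be >= 0, deceleration > 0")
    speed_m_s = speed_kmh / 3.6
    reaction_distance = speed_m_s * reaction_time_s
    braking_distance = speed_m_s ** 2 / (2.0 * deceleration_m_s2)
    return reaction_distance + braking_distance


def can_stop_in_time(speed_kmh: float, gap_m: float) -> bool:
    """True if the car can come to a full stop before reaching the pedestrian."""
    return stopping_distance_m(speed_kmh) <= gap_m


for speed in (30, 50, 100):
    print(f"{speed} km/h: about {stopping_distance_m(speed):.1f} m to stop")
```

Under these assumptions a car travelling at 50 km/h needs a little more than 20 metres to stop, so a pedestrian who steps out 15 metres ahead cannot be saved by braking alone, no matter how attentive the software is.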

Does this mean that in a hypothetical future where nearly every car drives autonomously, crosswalks become unnecessary and traffic lights for pedestrians obsolete? Cars will evade humans at all possible costs, so people could cross roads anywhere at any time. A game of Chicken-out where the car always chooses to lose, for the sake of pedestrian safety.[54]
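The logic of this game can be sketched with a small payoff table. The numbers below are invented and only express an ordering of outcomes (a collision is far worse for both sides than having to wait or to brake); they are not taken from Millard-Ball’s paper. The function `pedestrian_best_response` is likewise a hypothetical helper.

```python
# Payoffs as (pedestrian, car) for each combination of actions.
# All values are hypothetical; only their ordering matters.
PAYOFFS = {
    ("cross", "go"): (-100, -100),  # collision: catastrophic for both
    ("cross", "yield"): (1, -1),    # pedestrian crosses, car loses time
    ("wait", "go"): (-1, 1),        # pedestrian loses time, car passes
    ("wait", "yield"): (0, 0),      # both hesitate
}


def pedestrian_best_response(car_action: str) -> str:
    """Action that maximizes the pedestrian's payoff, given what the car will do."""
    return max(("cross", "wait"), key=lambda action: PAYOFFS[(action, car_action)][0])


# With a human driver the pedestrian cannot be sure which action the car takes.
# With a car that is known to always yield, the uncertainty disappears.
print("car always yields ->", pedestrian_best_response("yield"))
print("car never yields  ->", pedestrian_best_response("go"))
```

Once the car is known to always yield, crossing becomes the pedestrian’s best choice in every case. This is the formal core of the thought experiment: predictability on the side of the machine hands the initiative to the pedestrian.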

Legal and Ethical Dimensions

Current legislation (especially in Germany) requires a driver to have at least one hand on the steering wheel at all times. This ensures that some of the above-described features of convenience are not taken for granted – after all, if the situation demands it, the driver must be able to intervene within a fraction of a second.[55]

In 2021 the German Government published a draft bill (Gesetzesentwurf) that will alter this requirement, potentially after 2030. This is strongly connected to the actual progress in the field of autonomous vehicles and might be subject to change.[56]

In its current form the draft includes a number of requirements that need to be met for an autonomous vehicle to be declared road-legal in Germany. Cars should aim for risk-averse, minimally invasive and safety-driven behaviour. Systems shall be equipped with a safety mechanism that detects the limits of their abilities, and if those limits are reached, the car shall independently come to a full stop at a safe spot.[57]

Aside from that, the German Government, of course, requires autonomous cars to behave according to a set of ethically approved and clear rules that ensure pedestrian safety. The paper lists the following requirements for the system:

a) is designed to avoid and reduce harm,

b) in the event of unavoidable alternative harm to different legally protected interests, takes into account the significance of those interests, with the protection of human life having the highest priority, and

c) in the event of an unavoidable alternative endangerment of human lives, provides for no further weighting on the basis of personal characteristics,[58]
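How the three requirements a) to c) could translate into a decision rule can be hinted at with a strongly simplified sketch. It is an interpretation made for illustration, not the procedure prescribed by the draft: harm is reduced to two hypothetical fields, and requirement c) is reflected only by the fact that the data structure contains no personal characteristics at all. The names `Manoeuvre` and `choose_manoeuvre` are invented.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Manoeuvre:
    name: str
    endangers_human_life: bool
    property_damage_eur: float
    # Deliberately no fields for age, gender or other personal
    # characteristics: under rule c) they must not be weighed.


def choose_manoeuvre(options: Sequence[Manoeuvre]) -> Manoeuvre:
    """Pick the option with the least harm, human life taking priority over property."""
    if not options:
        raise ValueError("at least one manoeuvre is required")
    # Tuples compare element by element: first avoid endangering life (rule b),
    # then minimize the remaining damage (rule a).
    return min(options, key=lambda m: (m.endangers_human_life, m.property_damage_eur))


options = [
    Manoeuvre("continue", endangers_human_life=True, property_damage_eur=0.0),
    Manoeuvre("swerve into parked car", endangers_human_life=False, property_damage_eur=8000.0),
    Manoeuvre("emergency stop", endangers_human_life=False, property_damage_eur=500.0),
]
print(choose_manoeuvre(options).name)
```

The sketch also shows where such a rule ends: if every available option endangers a human life, the ordering no longer says anything meaningful, which is precisely the kind of dilemma the text refers to.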

Conclusion

What, then, constitutes the interaction between autonomous vehicles and pedestrians? An autonomously operating vehicle, be it in a testing scenario or in fully operational use, interacts with pedestrians in situations of mutual influence. These situations are constituted by all actors (participants) within an environment in a specific context. The behaviour of autonomous vehicles is based on statistical data, software and sensor input. The actions of pedestrians are highly unpredictable and depend on their aims, demographic criteria, the density of the crowd and so on.

The relationship between pedestrians and cars is therefore a tense one that requires careful consideration of a multitude of factors. Some of them are easy to determine (lanes and markings), whereas others are beyond rational predictability (the behaviour of crowds). Given the randomness of the real world, potentially dangerous black-swan events might disrupt the car's functioning.

The open question in the relationship of people and autonomous cars remains whether public infrastructures (streets, lanes, cities, car parks) are sufficiently equipped to accommodate humans and autonomous vehicles in a safe manner. Since the quest for autonomy is currently in the testing phase, we will see considerable development in the short- to medium-term future.

The interaction between pedestrians and autonomous vehicles will shift the agency of participants in traffic towards algorithms and machines. To ensure a reasonable degree of public safety and to build the necessary trust in autonomous vehicles, the development of a traffic-specific artificial general intelligence seems almost necessary.

Bibliography

[AUTOS] | Sprenger, Florian (Hg.), Autonome Autos, Medien- und Kulturwissenschaftliche Perspektiven auf die Zukunft der Mobilität, Transcript Verlag, 2021

Halpern, Orit; Mitchell, Robert; Geoghegan, Bernard, The Smartness Mandate: Notes toward a Critique, Essay, Grey Room 68, 2017

Susilawati, Susilawati; Wong, Wie Jie; Pang, Zhao Jian, Safety Effectiveness of Autonomous Vehicles and Connected Autonomous Vehicles in Reducing Pedestrian Crashes, Essay, Transportation Research Record, 2023

Hind, Sam, Infrastructural Surveillance, Essay, new media & society, 202

[SITUATION] | Marres, Noortje; Sormani, Philippe: KI testen. “Do we have a situation?”. In: Zeitschrift für Medienwissenschaft, Jg. 15 (2023), Nr. 2, S. 86-102. DOI: dx.doi.org/10.25969/mediarep/20064.

[CITIES] | Millard-Ball, Adam, Pedestrians, Autonomous Vehicles, and Cities, Journal of Planning Education and Research, Volume 38, Issue 1 doi.org/10.1177/0739456X16675674

Quere, Louis, The still – neglected situation?, Réseaux The French journal of communication 6 (2):223-253, January 1998, DOI:10.3406/reso.1998.3344

Strasser, Anna (Ed.), Anna’s AI Anthology – How to live with smart machines, xenomoi Verlag e.K., Berlin, 2024

Togelius, Julian, Artificial General Intelligence, MIT Press, 2024

References

[1] cf. [AUTOS] p. 167ff.

[2] cf. ibid. p. 170f

[3] cf. [AUTOS] p. 28

[4] ibid. p. 170

[5] ibid. p. 171

[6] cf. ibid. p. 171f.

[7] Wilcox, Philip, Vision Correction: Identifying the Best Way for an Autonomous Vehicle to “See” the World, Medium, medium.com/swlh/vision-correction-identifying-the-best-way-for-an-autonomous-vehicle-to-see-the-world-d633b96b31ac (20.03.25, 18:52)

[8] cf. [AUTOS] p. 173

[9] cf. ibid. p. 167 ff.

[10] Verdienstkreuz 1. Klasse für Pionier des autonomen Fahrens, Univ. d. Bundeswehr Pressestelle, www.unibw.de/home/news/2023/verdienstkreuz-1-klasse-fuer-pionier-des-autonomen-fahrens (24.03.25, 9:43)

[11] H.2.3 Van VaMoRs (1985 – 2004), Dynamic Machine Vision, www.dyna-vision.de/hardware-used/plants-controlled-autonomously/van-vamors-1985-2004 (25.03.25, 11:35)

[12] Google officially lists August 1998 as their founding date, See: about.google/company-info/our-story/ (31.03.24, 10:54)

[13] Sehendes Fahrzeug an das Deutsche Museum übergeben, Stephanie Linsinger, Pressestelle Universität der Bundeswehr München, www.idw-online.de/de/news158964 (26.03.25, 09:52)

[14] Tesla Full Self Driving serving as an example on this front. See Tesla Model Y Owner’s Manual, www.tesla.com/ownersmanual/modely/en_us/GUID-2CB60804-9CEA-4F4B-8B04-09B991368DC5.html (26.03.25, 15:38)

[15] See TESLA Press release: Tesla Vision Update: Replacing Ultrasonic Sensors with Tesla Vision, www.tesla.com/support/transitioning-tesla-vision (26.03.25, 15:55)

[16] Strasser, Anna (Ed.), Anna’s AI Anthology – How to live with smart machines, xenomoi Verlag e.K., Berlin, 2024, p.252

[17] Taken from Korzybski’s book Science and Sanity (1933), see Alfred Korzybski, Wikipedia, en.wikipedia.org/wiki/Alfred_Korzybski (26.03.25, 17:07)

[18] [AUTOS] p. 239

[19] Described in Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles J3016_201806, SAE International, See: www.sae.org/standards/content/j3016_201806 (13.04.25, 13:45)

[20] Cf. [AUTOS] p. 26

[21] Cf. [AUTOS] p. 27

[22] Cf. [AUTOS] p. 27f.

[23] Cf. [AUTOS] p. 28

[24] A potential product has been shown by TESLA under the label Robotaxi. See: www.tesla.com/we-robot (13.04.25, 17:52)

[25] A comprehensive discussion of Artificial General Intelligence can be found here: Togelius, Julian, Artificial General Intelligence, MIT Press, 2024, cf. p. 56

[26] A public debate about this is happening on Reddit under: Is Tesla’s entire fleet capturing and transmitting training data back to mothership?, r/TSLA, see: www.reddit.com/r/TSLA/comments/1ff3l25/is_teslas_entire_fleet_capturing_and_transmitting/ (29.03.25, 14:08)

[27] [SITUATION] p. 99

[28] Ibid. p. 99

[29] [SITUATION] p. 96, Original source: Quéré: The still – neglected situation?, 288, emphasis NM.

[30] Ibid. p. 94f. Original source: Ryan Tute: Driverless vehicle testing on public roads hailed as landmark moment, in: Infrastructure Intelligence, 24.11.2017, www.infrastructureintelligence.com/article/nov-2017/driverless-vehicle-testing-public-roadshailed-landmark-moment (22.4.2023), emphasis NM.

[31] [SITUATION] p. 94

[32] Ibid.

[33] Ibid. p. 95, originally German, paraphrased and translated by me.

[34] [SITUATION] p. 96

[35] cf. [SITUATION] p.94

[36] [SITUATION] p. 90, originally taken from an interview with an engineer. (2022/23)

[37] ibid. p.90f

[38] Cf. [SITUATION] p. 97

[39] [SITUATION] p. 98

[40] Cf. Ibid. p. 98

[41] Cf. [SITUATION] p. 102, Originally German, paraphrased and translated by me. Original italics.

[42] To be specific, this kind of n-body problem is meant for the analogy: Three-body problem, Wikipedia, en.wikipedia.org/wiki/Three-body_problem, (21.03.25 09:47)

[43] [AUTOS] p.238 ff.

[44] Ibid. cf. p.242 ff.

[45] For reference see: Trolley Problem, Wikipedia, https://en.wikipedia.org/wiki/Trolley_problem (28.03.25, 17:02)

[46] For example: Regulation No 17 of the Economic Commission for Europe of the United Nations (UN/ECE) – Uniform provisions concerning the approval of vehicles with regard to the seats, their anchorages and any head restraints, Official Journal of the European Union, See: www.eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:42010X0831(03) (28.03.25, 17:04)

[47] [SITUATION] p. 89, original Interview by Noortje Marres (2022-23)

[48] Cf. [AUTOS] p.235f.

[49] For a general explanation see: Black Swan Theory, Wikipedia, en.wikipedia.org/wiki/Black_swan_theory (14.04.16:35)

[50] [CITIES], see Bibliography for full title

[51] [CITIES] p. 7f.

[52] Cf. [CITIES] p. 8f.

[53] Ibid.

[54] Ibid.

[55] Cf. [AUTOS] p.29ff.

[56] Mentioned in [AUTOS] p. 28, original source: Bundesministerium für Digitales und Verkehr, Entwurf eines Gesetzes zur Änderung des Straßenverkehrsgesetzes und des Pflichtversicherungsgesetzes – Gesetz zum autonomen Fahren, see: bmdv.bund.de/SharedDocs/DE/Anlage/Gesetze/Gesetze-19/gesetz-aenderung-strassenverkehrsgesetz-pflichtversicherungsgesetz-autonomes-fahren.pdf (12.04.15:36)

[57] Ibid. cf. [PDF] p. 6f.

[58] Ibid. [PDF] p. 6, (quoted verbatim in German for reasons of accuracy.)